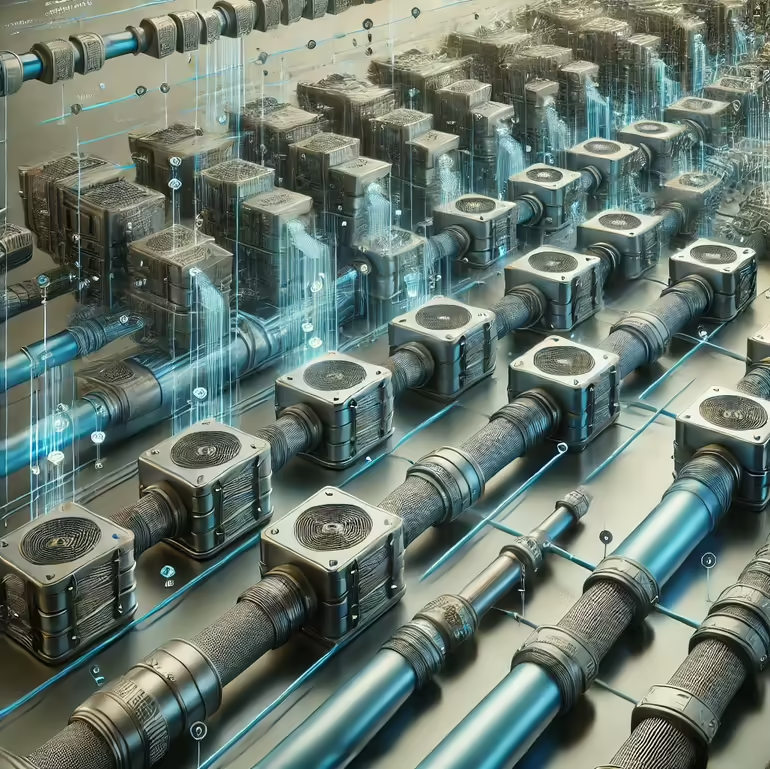

Pipes and Filters:

A Modular Approach to Streamlining Software Design

In today’s fast-paced, data-driven world, software systems need to be both flexible and scalable. Enterprises are constantly searching for efficient, reliable ways to handle complex data processing. One pattern that addresses these challenges head-on is the Pipes and Filters architectural pattern.

The Pipes and Filters pattern breaks down a task into a series of individual processing steps (filters) connected by data channels (pipes). It is a modular approach that allows each filter to work independently, performing a specific transformation on the data before passing it on to the next step. By dividing data processing into manageable, reusable components, organizations can achieve greater flexibility, scalability, and maintainability in their software systems.

For candidates looking to expand their technical expertise and for enterprise leaders aiming to optimize their infrastructure, understanding the Pipes and Filters pattern is a practical way to improve software design. Curate Partners offers consulting services to implement such patterns and specializes in finding the right talent to fill your organization's staffing needs, so these architectural solutions can be integrated smoothly.

What is the Pipes and Filters Pattern?

At its core, the Pipes and Filters pattern is a design strategy that organizes complex processing tasks into smaller, isolated units. It involves breaking down data processing into a pipeline of sequential steps, where each step (filter) is responsible for a specific transformation or operation. These filters are then connected by data channels (pipes) that pass the processed data to the next filter in the sequence.

In simpler terms, imagine a factory where each machine (filter) performs a single operation on a product, such as cutting, painting, or polishing. Once a machine completes its task, it sends the product down a conveyor belt (pipe) to the next machine. This modular approach allows for flexibility in how the machines are organized or reconfigured. The same concept applies to software design using Pipes and Filters, where data flows through a series of processing steps to achieve a desired outcome.
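The factory analogy can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the filter names (`strip_whitespace`, `to_lowercase`, `collapse_spaces`) and the `run_pipeline` helper are hypothetical and chosen only for this example. Each filter is a plain function, and the "pipe" is simply the act of handing one filter's output to the next.

```python
from functools import reduce
from typing import Callable, Iterable

# A filter is any function that takes a value and returns a transformed value.
Filter = Callable[[str], str]


def strip_whitespace(text: str) -> str:
    """Remove leading and trailing whitespace."""
    return text.strip()


def to_lowercase(text: str) -> str:
    """Convert the text to lowercase."""
    return text.lower()


def collapse_spaces(text: str) -> str:
    """Replace runs of whitespace with a single space."""
    return " ".join(text.split())


def run_pipeline(data: str, filters: Iterable[Filter]) -> str:
    """Pass data through each filter in order; reduce plays the role of the pipe."""
    return reduce(lambda value, apply_filter: apply_filter(value), filters, data)


result = run_pipeline(
    "  Hello   PIPES and Filters  ",
    [strip_whitespace, to_lowercase, collapse_spaces],
)
print(result)  # hello pipes and filters
```

Because `run_pipeline` only requires that each filter accept and return a string, any function with that shape can be dropped into the list.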

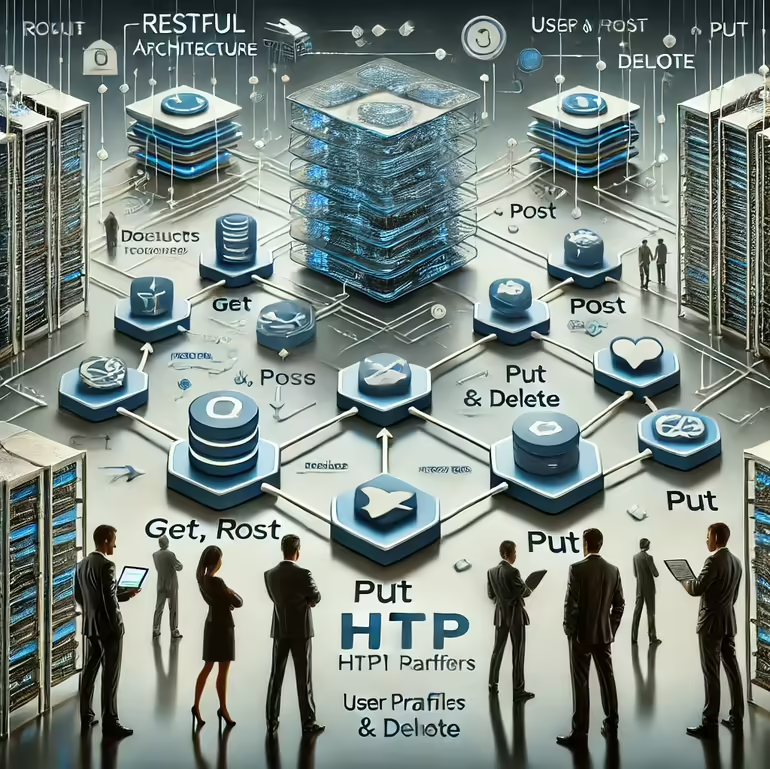

Components of the Pipes and Filters Pattern

Understanding the key components of the Pipes and Filters pattern is crucial for grasping how this architecture works and why it is so effective:

Filters:

Filters are the individual processing components that perform specific operations on the data. Each filter is designed to have a single responsibility, such as transforming, enriching, or validating data. These filters are independent modules, making them reusable and easily maintainable.

Pipes:

Pipes are the connectors that link filters together. They facilitate the flow of data between filters. Whether using simple data structures, streams, or queues, pipes ensure that data flows in sequence from one filter to the next.

Data Transformation:

As data moves through the pipeline, each filter transforms it in some way. The transformation can range from simple modifications to complex restructuring, depending on the business requirements. The order of filters determines the final output.

Decoupling:

Filters are loosely coupled, meaning that they don't have direct knowledge of each other. Instead, they communicate through the pipes, reducing dependencies and promoting flexibility. This decoupling enables independent development, testing, and modification of filters.

Flexibility:

One of the greatest advantages of the Pipes and Filters pattern is its flexibility. Filters can be added, removed, or reordered to adapt to changing requirements. Whether optimizing for performance or responding to new business needs, this architectural pattern supports a dynamic approach to software development.
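One way to picture these components together is with Python generators, where each filter consumes an iterable and yields results, and the iterator passed between them acts as the pipe. The sketch below is an assumption-laden illustration: `keep_even`, `add_one`, `square`, and `connect` are hypothetical names invented here. It shows that filters know nothing about each other, and that reordering or removing a filter changes the output without changing any filter's code.

```python
from typing import Callable, Iterable, Iterator, List

# A stage takes a stream of integers and returns a new stream.
Stage = Callable[[Iterable[int]], Iterator[int]]


def keep_even(numbers: Iterable[int]) -> Iterator[int]:
    """Filter: pass through only even numbers."""
    for n in numbers:
        if n % 2 == 0:
            yield n


def add_one(numbers: Iterable[int]) -> Iterator[int]:
    """Filter: add one to every number."""
    for n in numbers:
        yield n + 1


def square(numbers: Iterable[int]) -> Iterator[int]:
    """Filter: square every number."""
    for n in numbers:
        yield n * n


def connect(source: Iterable[int], stages: List[Stage]) -> Iterable[int]:
    """Chain the stages; each stage reads from the previous stage's output."""
    stream = source
    for stage in stages:
        stream = stage(stream)
    return stream


data = [1, 2, 3, 4]

first = list(connect(data, [keep_even, add_one, square]))
print(first)  # [9, 25]

# Same filters, different order: the result changes.
second = list(connect(data, [add_one, keep_even, square]))
print(second)  # [4, 16]

# Removing a filter requires no change to the remaining ones.
without_square = list(connect(data, [keep_even, add_one]))
print(without_square)  # [3, 5]
```

Generators also process items lazily, one at a time, which is one common way to keep memory use low in long pipelines.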

Why Should Enterprises Adopt Pipes and Filters?

The Pipes and Filters pattern offers several key benefits that align with the needs of modern enterprises. Whether you’re building data processing systems or integrating complex workflows, this architectural pattern provides the flexibility, modularity, and scalability needed to meet diverse challenges.

1. Modularity

Modularity is one of the hallmark strengths of the Pipes and Filters pattern. Each filter is self-contained, with a single responsibility, allowing developers to work on individual filters without affecting the rest of the pipeline. This modularity makes it easier to test, maintain, and reuse filters in different pipelines or projects. For enterprises managing multiple products or services, this is a critical advantage.

2. Scalability

Filters in the pipeline can be parallelized or distributed across different systems to handle larger volumes of data, provided each unit of data can be processed independently of the others. For example, a filter processing video data could be scaled to run on multiple machines, each handling a different part of the dataset. This parallelism helps systems remain efficient and responsive as data processing demands grow.
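As a rough single-machine sketch of this idea, a stateless filter can be applied to many items concurrently with the standard library's `concurrent.futures`. The `brighten` filter, the `parallel_filter` helper, and the toy "frames" (lists of pixel values) are assumptions made for illustration. Distributing work across multiple machines would need additional infrastructure, such as a message queue, that is not shown here.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, List


def brighten(frame: List[int]) -> List[int]:
    """Hypothetical per-frame filter: raise each pixel value, capped at 255."""
    return [min(pixel + 10, 255) for pixel in frame]


def parallel_filter(
    func: Callable[[List[int]], List[int]],
    items: Iterable[List[int]],
    workers: int = 4,
) -> List[List[int]]:
    """Apply one filter to many items using a pool of worker threads.

    Executor.map returns results in input order, so downstream filters
    see the same sequence they would see in a sequential pipeline.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(func, items))


frames = [[0, 100, 250], [5, 5, 5], [255, 0, 128]]
brightened = parallel_filter(brighten, frames)
print(brightened)  # [[10, 110, 255], [15, 15, 15], [255, 10, 138]]
```

Threads suit I/O-bound filters. For CPU-bound work in Python, `ProcessPoolExecutor` from the same module is usually the better fit, since it sidesteps the global interpreter lock.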

3. Flexibility

One of the main appeals of the Pipes and Filters pattern is its adaptability. Filters can be reordered, removed, or replaced without overhauling the entire system. This reconfigurability allows enterprises to quickly adapt to changing market conditions or internal requirements.

4. Maintainability

Because each filter is an independent unit, maintaining the pipeline is far simpler than with tightly coupled systems. If a filter encounters an issue, developers can isolate and fix the problem without modifying the other filters. This isolation shortens troubleshooting and can reduce downtime.

5. Testability

Since filters are designed as independent units, they can be unit tested in isolation. This simplifies the testing process and reduces the chances of bugs slipping into production.
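To illustrate testing in isolation, the sketch below tests a single filter with Python's built-in `unittest` module, with no pipeline or neighboring filters involved. The `normalize_email` filter and the record layout are hypothetical examples, not part of any particular system.

```python
import unittest
from typing import Dict


def normalize_email(record: Dict[str, str]) -> Dict[str, str]:
    """Hypothetical filter: return a copy of the record with a tidy email."""
    cleaned = dict(record)  # copy so the caller's record is not mutated
    cleaned["email"] = record["email"].strip().lower()
    return cleaned


class NormalizeEmailTest(unittest.TestCase):
    def test_strips_and_lowercases(self) -> None:
        result = normalize_email({"email": "  Ada@Example.COM "})
        self.assertEqual(result["email"], "ada@example.com")

    def test_does_not_mutate_input(self) -> None:
        original = {"email": " X@Y.Z "}
        normalize_email(original)
        self.assertEqual(original["email"], " X@Y.Z ")


# Run the tests programmatically so the example works as a plain script.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(NormalizeEmailTest)
outcome = unittest.TextTestRunner(verbosity=0).run(suite)
```

The tests only need the filter's input and expected output, which is what makes a single-responsibility filter straightforward to verify.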

Common Use Cases

The Pipes and Filters pattern has broad applicability across various industries. Some common use cases include:

Data Processing Pipelines: In industries dealing with massive amounts of data, such as finance or healthcare, this pattern is well suited to building ETL (Extract, Transform, Load) processes. Each filter handles a specific transformation or validation task, which makes it easier to keep outputs clean and accurate.

Image and Video Processing: From video editing to real-time streaming services, this pattern allows different filters to apply various transformations to media data. Filters can be specialized for tasks like resizing, color correction, or adding effects.

Text Processing: Text analysis, natural language processing (NLP), and search engine algorithms can benefit from the Pipes and Filters pattern. Filters can handle tasks like tokenization, stemming, or sentiment analysis.

Network Protocols and Data Validation: For systems dealing with network protocols or data transmission, the Pipes and Filters pattern allows incoming and outgoing data packets to be filtered and validated in discrete stages, supporting secure and reliable communications.
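Taking the first of these use cases, a small ETL flow might look like the sketch below. Everything in it is assumed for illustration: the in-memory CSV text, the `id` and `amount` column names, and the filter names `extract`, `drop_invalid`, `to_cents`, and `load`. A real pipeline would read from and write to actual data stores.

```python
import csv
import io
from typing import Dict, Iterable, Iterator, List

# Hypothetical input; the second row has an invalid amount.
RAW_CSV = """id,amount
1,10.50
2,not-a-number
3,4.25
"""


def extract(text: str) -> Iterator[Dict[str, str]]:
    """Extract: parse CSV text into one dictionary per row."""
    yield from csv.DictReader(io.StringIO(text))


def drop_invalid(rows: Iterable[Dict[str, str]]) -> Iterator[Dict[str, str]]:
    """Transform (validation): skip rows whose amount is not numeric."""
    for row in rows:
        try:
            float(row["amount"])
        except ValueError:
            continue
        yield row


def to_cents(rows: Iterable[Dict[str, str]]) -> Iterator[Dict[str, int]]:
    """Transform: convert ids to integers and amounts to whole cents."""
    for row in rows:
        yield {"id": int(row["id"]), "cents": round(float(row["amount"]) * 100)}


def load(rows: Iterable[Dict[str, int]], target: List[Dict[str, int]]) -> None:
    """Load: append rows to a list standing in for a database table."""
    target.extend(rows)


warehouse: List[Dict[str, int]] = []
load(to_cents(drop_invalid(extract(RAW_CSV))), warehouse)
print(warehouse)  # [{'id': 1, 'cents': 1050}, {'id': 3, 'cents': 425}]
```

The same shape carries over to the other use cases: a text pipeline would swap in filters such as tokenization and stemming, and a media pipeline would swap in resizing or color correction.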

How Curate Partners Can Help

At Curate Partners, we understand that implementing patterns like Pipes and Filters requires both technical expertise and specialized talent. As a consulting service that focuses on advanced architecture and software solutions, we partner with organizations to design, implement, and optimize modular architectures that meet your specific needs.

Finding the Right Talent:

The success of any software architecture hinges on the quality of the team behind it. Curate Partners excels at identifying and recruiting specialized talent—developers, architects, and technical consultants—who are skilled in implementing architectural patterns like Pipes and Filters. Whether you need temporary staffing to support an ongoing project or a long-term hire, we connect you with professionals who have the exact skills you require.

Custom Consulting Solutions:

In addition to staffing, we offer tailored consulting services to help your organization fully leverage the potential of the Pipes and Filters pattern. From architecture design to pipeline optimization, our experts work closely with your internal teams to ensure that your systems are modular, scalable, and future-proof.

Conclusion

The Pipes and Filters architectural pattern provides a robust, modular approach to processing data. Its flexibility, scalability, and maintainability make it a valuable tool for organizations aiming to build efficient, future-ready software systems. Whether you’re an enterprise leader seeking to improve your systems or a candidate looking to expand your expertise in modern software architecture, mastering Pipes and Filters is a critical step.